Humans understand the image and its content by merely looking at it. Machines do not work the same way. It needs something more tangible, organized to understand, and give output. Optical Character Recognition (OCR) is the process, which helps the computer to understand the images. It enables the computer to recognize car plates using a traffic camera. Ocr kicks in to convert handwritten documents into a digital copy. The primary objective is to makes it a lot easier and faster for some people to do their jobs.

The OCR technology allows extracting text from an image or a scanned document. It can reorganize and extract any handwritten or printed character from the image. Optical Character Recognition is the process that detects the text content on images and translates the images into encoded text that the computer can easily understand. It scans the image, text, and graphic elements and converts them into a bitmap, a black and white dots matrix.

The image gets pre-processed afterward, where the brightness and contrast are adjusted to improve the process’s precision. OCR is not 100% precise, as it needs user/programmer involvement to correct a few elements missed in the scanning process. Natural Language Processing (NLP) is used to achieve error correction.

Several tools support using OCR but most of the commercial OCR engines are paid or allow using limited functionality on the free trial version. The users can also utilize OCR technology to extract text and tables from the PDFs and extract text from many non-editable formats.

OCR tools are also beneficial for various fields. Following are some of such fields where OCR can help speed up the process:

Python is an efficient and easy-to-use programming language well known for image and text processing abilities. This language provides a huge number of libraries that can automatically complete most of the user tasks.

In python, Optical Character Recognition is achievable by using two different methods.

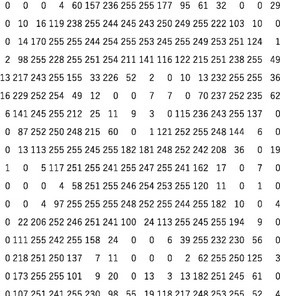

It is essential to comprehend how one reads and stores images on our machines before proceeding further—every Image forms by merging small but square boxes, known as pixels.

The computer saves images in the form of a matrix of different numbers. The dimension of the matrix depends on the number of pixels according to the picture. Let’s suppose the previous image’s dimensions are 280 x 200 or (h x w). The dimensions are the number of pixels an image consists of height and width. (Height x width)

Pixel values represent the brightness and intensity of the picture, ranges between 0 – 255. 0 illustrates the black color, and 255 denotes white, respectively.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from skimage.io import imread, imshow

image = imread('MUFC.jpg')

Image.shape, image

imshow(image)

Image.shape () function extracts the matrix values of the image.

Imread () function reads the image from the path.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from skimage.io import imread, imshow

image = imread('MUFC.jpg' as_gray = true)

Grey_image = image

imshow(grey_image)

Imread (destination, parameter) = as_gray is a sub-function that allows it to convert the picture in Black and White mode if the value is actual.

Tesseract library in python is an optical character recognition (OCR) tool. It helps recognize and read the text embedded in images. Tesseract works as a stand-alone script, as it supports all image types sustained by the Pillow and Leptonica libraries, including all formats as jpeg, png, gif, BMP, tiff, and others. PyTesseract works by converting the test and graphic elements of the scanned image into a bitmap. A bitmap contains white and black dots. The image goes through the pre-processing phase adjusting the brightness and contrast before data extraction and conversion. Suppose used as a script, PyTesseract prints the documented text instead of writing it to a file.

PyTesseract can be an OCR in Python or a library and a wrapper for Goggle’s Tesseract OCR Engine. It can wrap the Python code around Tesseract OCR and provide the capability to work with various software structures. The users can utilize various Python OCR libraries to complete different tasks. Following are some of the libraries that the developers can use with Tesseract:

The Tesseract OCR process typically consists of some basic steps. Following is the breakdown of the process in steps for better user understanding:

This Tesseract OCR process provides basic steps to provide an input image and get the output text. However, the users can use their customized solutions using different approaches and tools necessary for their use cases.

Python libraries are always the easiest to set up. It is usually the one-step if the user is aware of PIP. To use PyTesseract, the user needs two things:

Pip install PyTesseract

Create the directory and initiate the project.

$ mkdir ocr_server && cd ocr_server && pipenv install --two

The user creates a primary function, which takes input from the user as an image and returns it in the text form.

try:

from PIL import Image

except ImportError:

import Image

import pytesseract

def ocr_core(filename):

"""

This function will handle the core OCR processing of images.

"""

text = pytesseract.image_to_string(Image.open(filename))

return text

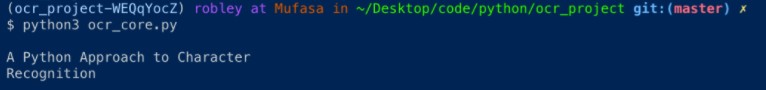

print(ocr_core('images/ocr_example_1.png'))

The function is quite simple, as in the initial five lines, the user is taking an image as an input from the Pillow library and PyTesseract library.

Attached picture in the code:

The user then creates an ocr_core function. It inputs a file name and returns the text contained in the image.

The result of this code is:

OCR script works 100% on the digital text because it was elementary since this is digital text, picture-perfect and precise, unlike handwriting.

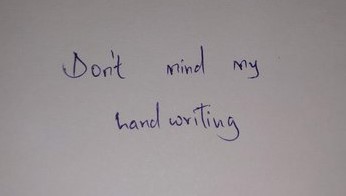

A handwritten note is input this time on the OCR PyTesseract. Let’s see the results.

Note:

Output:

The output of this note is as below:

Ad oviling writl

As it is evident that OCR may not entirely extract text from handwriting as it did with other images shown in the examples mentioned above.

The Tesseract engine may extract information about the orientation of the text in an image and variation. The orientation is a figure of the engine’s precision about the orientation identified to act as a guide. The script section represents the confidence marker also follows the writing system used in the text.

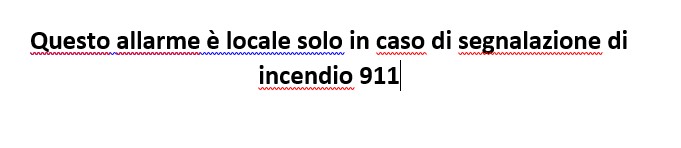

Tesseract allows the user to detect the language. It is a built-in function in the form of a flag.

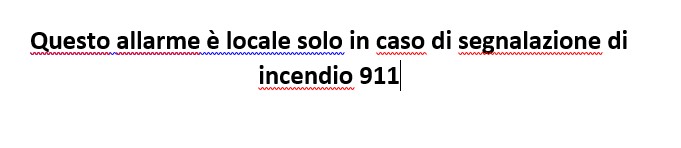

pytesseract.image_to_string(Image.open(filename), lang='ita')

Please refer to the below-mentioned example without the language flag:

Output:

Ques allarm e local solo in so seg one cend 911

Without the flag, it is reading the text in the English language and trying to decrypt it. Hence, it has shown the word’s output, which the compiler could extract in the English language.

Now, refer to the below-mentioned example without the language flag:

Output:

Questo allarme è locale solo in caso di segnalazione di incendio 911

Without the language flag (), the OCR script missed some Italian words. As after leading the flag, it was able to detect all the Italian content. The translation is not yet possible, but this is still notable. Tesseract’s official documentation includes the supported languages.

Tesseract is useful for many fields and tasks, as mentioned above. However, there are some limitations to the technology as well. Following are some of the limitations of the open-source solution:

Through Tesseract and the Python-Tesseract library, users have been able to photograph images and receive output in the form of text. It is Optical Character Recognition, and it can be of boundless use in many situations.

In the examples mentioned above, the user has built a scanner. It inputs an image, returns the text in the pictorial, and integrates it into an interface. It enables a user to render the functionality in a more familiar medium and in a way that can serve various individuals simultaneously.