Big dataset providers are now fantastically popular and growing exponentially every day. We’re going to evaluate a variety of datasets and Big Data providers ideal for machine learning and data mining research projects in order to illustrate the astonishing diversity of data freely available online today. We will also demonstrate a technique of machine learning with a code sample in Python which shows how to use one of the most popular Big Data hosts.

Along the way, we will see datasets that reveal the complete human DNA sequence, and others that reveal the population demographics of entire countries. This is not only a list of 50 datasets; we are presenting 50 Big Data providers along with one remarkable dataset from each provider. Keep in mind that most of these providers host thousands of datasets. All of the items in our selection are currently maintained and updated; that’s one of the most important features contributing to the relevance and usefulness of a dataset. Let’s start by looking at cutting-edge deep learning datasets offered by providers such as AWS, Kaggle, and MS Azure, where we can find a rich variety of topics. Table 1 shows a quick summary of the first five.

Last modified on January 29, 2018, this amazing dataset contains five TB of fresh data collected by 300 scientists with the goal to “characterize the microbial communities inhabiting the human body and elucidate their role in human health and disease.” The target URL contains everything needed to explore the Human Microbiome Project (HMP), including the Chiron Dockers and other APIs and tools, most importantly the HMP Data Portal. Data features include microbial and human genome sequences. Methods of query include the AWS SDK and HTTP request.

OpenAQ features an introduction to BigQuery using Python with Pandas and BigQueryHelper by importing google.cloud, and includes a multitude of code examples demonstrating the use of Pandas to set up queries and create DataFrames for use in deep learning projects such as climate change forecasting. The OpenAQ link provides all resources needed to query their vast dataset for data on pollution and air quality on a global scale. Dataset features include air quality indexes and labels. Method of access is bigquery-public-data.openaq.[TABLENAME].

Rijksmuseum is an extraordinary dataset of 20,000 digitized paintings representing classical art such as the self-portrait of Van Gogh for use in image processing and computer vision. Training convolutional neural networks in image recognition is a targeted development for the curated collection. For a great intro to techniques of machine learning please have a look at our ByteScout tutorial including a TensorFlow starter.

Containing more than 4,000 potential data features and millions of samples, these time series indicators describe educational access, progress, and success, as well as literacy rates, teacher-student populations, and cost factors. The dataset covers the education cycle from pre-primary through tertiary education levels. The method of accessing this rich source of forecasting data is via API query or a direct file download.

Table 1. Summary of Top Big Data Providers

| Provider | Ex. Dataset Name | Description of Data |
| --- | --- | --- |
| AWS | Microbiome | Human Microbiome Project Data Set |
| Kaggle | OpenAQ | Global Air Pollution Measurement, climate change forecasting |
| Rijksmuseum | | Digitized paintings for image processing, computer vision |
| MS Azure | NY Taxi | Taxi trip data, ML methods optimize routing |
| Elite | Global Education | World Bank academic success forecasting |

Let’s take a moment to explain how to deal with massive public datasets. Usually, the first step in determining the viability of your project is to quickly ascertain the relevance and quality of the data. The source URLs provided here all contain info about the frequency of updates to the datasets and methods of accessing the data. Just click the main link over each numbered paragraph to go straight to a table of details and access instructions. Most datasets accept queries via API and offer direct download of CSV files as well. The easiest way to access the datasets is with a quick Python Pandas data frame. Many providers also extend downloads to JSON and XML formats. The best way to peruse a sample of any such dataset is to print a header with a number of records straight to your command line or perhaps to prototype it in a Jupyter Notebook. So, let’s have a look at how to actually open a CSV downloaded from a provider, and scan through several records to ensure relevance and integrity.

In this example, we will download a quick and easy dataset to illustrate basic points. First, let’s get an international currency exchange dataset in which we can compare currency rate changes for a period of one month. This type of data might be used to forecast future exchange values, or in a special case, it might be used to complete another dataset with missing elements. In fact, missing table data completion is one of the most popular practical applications of machine learning today.

Open the Bank of Canada site and download the CSV file here. Initially, we don’t know how many features and samples (or columns and rows) the file contains. But assuming that this is an ordinary comma-separated values file, we can simply load it into a Pandas data frame and have a quick look at the header and a few rows of data like this:

    # Quick CSV import and view header
    import pandas as pd

    dataframe = pd.read_csv("0117rates.csv")
    print(dataframe.head(5))

Here we import Pandas and load the data file into a data frame. I abbreviated the filename; the file contains the January 2017 exchange rates between the currencies of selected countries and the Canadian dollar. Running this brief script, you will notice that the header contains some extra info that Pandas cannot sort out. Also, the data is really tab-separated rather than comma-separated, and this is something you will encounter often: variations in file formatting. The easiest solution to reformat this dataset is to open it in your favorite editor and replace the whitespace separators with commas, and double quotes with nothing. Now it looks more like a CSV.

Next, note the series and label descriptions begin at A12. Loading this table without cleaning first will produce the error, “error tokenizing data” generated by Pandas, because it does not know what to do with all the extra text. The easy way to manage this is to create a second copy of the CSV and delete the extra header info. In fact, if you can recognize the country codes, go ahead and delete all the text above the column headers. Now run the above script again and you should see the clean column headers and the first five rows of data. For a complete introduction to techniques of machine learning and popular development platforms, please have a look at our ByteScout tutorial which includes a TensorFlow starter with MNIST image processing.
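If you would rather not edit the file by hand, the same cleanup can often be done at load time. The following is a minimal sketch, assuming a tab-separated file whose real column header sits below a few lines of descriptive text. The sample text, the column names, and the rule for spotting the header line are made up for illustration and do not reproduce the actual Bank of Canada layout.

```python
import io

import pandas as pd

# Hypothetical stand-in for the downloaded file: two lines of
# descriptive text, then a tab-separated table.
raw_text = (
    "Monthly exchange rates\n"
    "Source: example data only\n"
    "Date\tUSD\tEUR\n"
    "2017-01-03\t1.34\t1.40\n"
    "2017-01-04\t1.33\t1.39\n"
    "2017-01-05\t1.32\t1.40\n"
)

# Find the first line that looks like the column header (assumed here
# to start with "Date") so that everything above it can be skipped.
lines = raw_text.splitlines()
header_row = next(i for i, line in enumerate(lines) if line.startswith("Date"))

dataframe = pd.read_csv(
    io.StringIO(raw_text),
    sep="\t",             # the table is tab-separated, not comma-separated
    skiprows=header_row,  # ignore the descriptive text above the header
)
print(dataframe.head(5))
```

For a real download, pass the file path to `pd.read_csv` in place of the `io.StringIO` object and adjust the header test to match the file's first column name.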

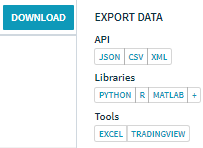

Quandl famously hosts rich and robust financial analysis and forecasting datasets for public access. In this example, USA gross domestic product change data are first displayed in the graph tab. After selecting the time params of your choice and examining the chart, click the table tab to see the download button and method of access options as shown in the figure at right. Quandl supports Excel’s external data source functions, along with API access via standard languages, including R which is especially suited for statistical methods.

Every imaginable data feature derived from the United States census can be queried and downloaded through the Census data portal. As with Quandl and many providers, datasets may be chosen and configured by time series, and then displayed for quick viewing right in the Census site. Once the desired data table is produced, it can be downloaded on the same page. US Census also offers a variety of query tools and APIs for developers and data mining projects.

Not merely a provider of datasets, this is a provider of providers. A truly massive and diverse array of additional providers (called “Resource Hubs”) and datasets is available here in a smooth and easy user interface which unites all. Follow the Ecosystem Resource Hub to the USGS BISON data source page. Here you will find all the APIs and feature references to mine the Biodiversity datasets made public by the US Interior Dept. Several source-specific APIs are available. Agriculture, maritime, health, energy, and many more Resource Hubs are here. For example, hourly energy demand data are constantly reported at the Energy Resource Hub. 249 individual datasets were listed for this Hub alone. Five data formats are listed for download options.

Socrata is a huge provider of datasets across hundreds of topics including business, finance, and management. Corporate branding data is a remarkable highlight at Socrata, which maintains a corpus of thousands of branches to potentially millions of individual datasets. The USA Starbucks franchise location dataset is a geographical map point reference with an API tool for query generation and HTTP requests.

This GitHub repository supplies abundant datasets for NLP projects, including this Reuters news service dump of English. The provider URL lists 200 GB of public mail archives from Apache (developer of the MXNet machine learning framework), 11 GB of Amazon product reviews, 8 GB of Reddit comments, and an additional list of Big Data providers, some of which are featured here.

Table 2. Providers of Economic Datasets

| Provider | Ex. Dataset Name | Description of Data |
| --- | --- | --- |
| Quandl | Economics | GDP growth rates and other global financial datasets |
| US Census | Factfinder | Community economic characteristics |
| Data.gov | Resource Hubs | USGS Biodiversity Information and Stats |
| Socrata | Starbucks GPS | USA Starbucks franchise location dataset |
| Github | Reuters NLP | 40 GB of news stories for natural language processing |

Looking back at the BOC site where we downloaded the CSV, we notice that the site contains a lot of tools for visualizing available data. This currency site has animated and interactive charts that are useful for studying data prior to drawing conclusions and making assertions. You can also be certain that data elements are less likely to be missing when the set is actively maintained by the provider. Sites like this are ideal when you know exactly what data you’re looking for. When you want to browse for fascinating datasets that you didn’t even know existed, on the other hand, that’s when providers like AWS never fail to amaze. With these ideas in mind, let’s return to curating our list of Big Data providers.

Requires a quick sign-up to join the community of providers. Data World is an enormous provider of datasets ranging from agricultural market data to sports and travel statistics. In this Commodity Index Report, a variety of data tags convert easily to features like soy market data by trade date. The method of access is by CSV download.

Although NSF’s datasets are hosted by the clearinghouse data.gov, this extraordinary resource needs a particular mention because ALL of the science-related data curated by US government agencies are distributed first by NSF. This dataset is an XML file containing funding rates for competitive research proposals. NSF’s Developer Resources site contains APIs and query tools for accessing all data mandated by the 2013 OMB.

Open Data Catalog is an immense collection of datasets provided as public by the World Bank. A major inventory of economic and financial forecasting datasets is available here. This “Evaluation Microdata Catalog” provides access to data curated during impact evaluations conducted by the World Bank. API and CSV download are the methods of access.

CDC is the most important agency in the world for tracking and monitoring pathogen origin and effects. A variety of data files, including Birth, Period Linked Birth – Infant Death, Birth Cohort Linked Birth – Infant Death, Mortality Multiple Cause, and Fetal Death, are available for independent research and analyses.

The IMF publishes a range of time series data on IMF lending, exchange rates, and other economic and financial indicators. This WEO database contains selected macroeconomic data series from the statistical appendix of the World Economic Outlook report. CSV download is the main access method.

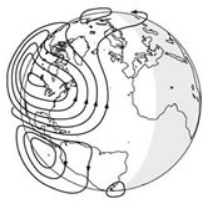

We now demonstrate a very popular methodology in machine learning to extract a pattern from one of our featured datasets. We will use the UCI-curated ionosphere dataset (item 34 below) to determine whether the signal data collected from antennas show a pattern suggesting a structure in the Earth's ionosphere. The code sample is heavily commented to explain every step in the process.

In this mini-tutorial, we will demo the machine learning functions provided in the SKLearn Python library to extract and analyze the dataset, predict test values, and calculate the accuracy of our model.

The first step is to download a copy of the CSV file from UCI. Next, open your Python command line and create a new project in your favorite editor. Paste the code below exactly. The only modification you need to make is the path to your downloaded data:

    # ByteScout tutorial on the SKLearn machine learning Python library.
    # Extract and analyze the UCI public dataset on the structure
    # of the Earth's ionosphere.

    # Here is the standard setup for SKLearn ML functions:
    import csv

    import numpy as np
    import pandas as pd
    from sklearn.model_selection import cross_val_score, train_test_split
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.preprocessing import MinMaxScaler

    datafile = "ionosphere.data"  # set this to the path of your download

    X = np.zeros((351, 34), dtype='float')
    Y = np.zeros((351,), dtype='bool')

    # Divide data into two arrays of params (X) and target (Y).
    # Pandas also contains functions to divide arrays:
    with open(datafile, 'r') as input_file:
        reader = csv.reader(input_file)
        for i, row in enumerate(reader):
            if not row:  # ignore a blank line, if the file has one
                continue
            X[i] = [float(datum) for datum in row[:-1]]
            # change value 'g' to True (1); any other label becomes False (0)
            Y[i] = row[-1] == 'g'
    print(X.shape, Y.shape)

    # Summarize the X params array: its shape and the number
    # of unique values in each feature column
    def rstr(df):
        return df.shape, df.nunique()

    print(rstr(pd.DataFrame(X)))

    # Use sklearn MinMaxScaler to scale the data features to within
    # range 0 to 1, so no single feature dominates the distance metric
    X_transformed = MinMaxScaler().fit_transform(X)

    # Split the dataset into two sections, one for training and a
    # second for testing the model (default split: 75% train, 25% test):
    X_train, X_test, y_train, y_test = train_test_split(
        X_transformed, Y, random_state=14)
    print("Samples in training data =", X_train.shape[0])
    print("Samples in test data =", X_test.shape[0])
    print("Feature count =", X_train.shape[1])

    # Fit the K-nearest neighbor model in sklearn, a pattern matching
    # ML technique. SKLearn conveniently does all the calc. The defaults
    # are n_neighbors=5, weights='uniform', metric='minkowski', p=2:
    KNN_io = KNeighborsClassifier()
    KNN_io.fit(X_train, y_train)

    # Cross validation scoring:
    trans_scores = cross_val_score(KNN_io, X_transformed, Y, scoring='accuracy')
    average_accuracy = np.mean(trans_scores) * 100
    print("Average accuracy =", average_accuracy)

    # Run against test data and print the accuracy from comparing
    # predicted values with the target values in the Y test set:
    y_predicted = KNN_io.predict(X_test)
    accuracy = np.mean(y_test == y_predicted) * 100
    print("Accuracy =", accuracy)

Training this KNN model results in an accuracy of about 86%, which is not extremely impressive and suggests that a reexamination of the theory and dataset is in order. This is nevertheless an excellent and typical example of ML methodology in practical use today.
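To make the K-nearest-neighbor step less of a black box, here is a minimal pure-Python sketch of the idea: classify a point by a majority vote among the k closest training points, measured by Euclidean distance. The function name `knn_predict` and the tiny two-cluster dataset are invented for illustration. This is not how SKLearn implements the classifier internally, only the same technique in its simplest form.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=5):
    """Predict the label of `query` by majority vote among its k
    nearest training points, using Euclidean distance."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Made-up data: one cluster labeled True ("good"), one labeled False ("bad").
train_points = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.15),
                (0.9, 0.8), (0.8, 0.9), (0.85, 0.85)]
train_labels = [True, True, True, False, False, False]

near_first_cluster = knn_predict(train_points, train_labels, (0.2, 0.2), k=3)
near_second_cluster = knn_predict(train_points, train_labels, (0.7, 0.9), k=3)
print(near_first_cluster, near_second_cluster)
```

Because every prediction depends on raw distances, a feature with a much larger numeric range than the others would dominate the vote, which is one reason the tutorial scales all features to the range 0 to 1 first.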

The example dataset here is an Atmospheric model and contains Ensemble data assimilation for wave analysis, monthly means of daily means, wave data assimilation, and Synoptic monthly means in the collection of wave models. The method of access is via downloadable API.

A fantastic variety of business parameter data for machine learning is available here, including over 1.2 million attributes like hours, parking, availability, and ambiance, plus aggregated check-ins over time for each of the 174,000 businesses. The method of access is by SQL query, with results in JSON and CSV formats.

OpenFDA provides APIs and full sets of downloadable files to a number of high-value, high priority, and scalable structured datasets, including adverse events, drug product labeling, and recall enforcement reports. Start with the API basics page, which provides a great introduction to openFDA and some good pointers on how you can begin developing using the platform. OpenFDA features an open user community for sharing open-source code and examples.

Uber Movement provides anonymized data from over two billion trips to help urban planning around the world. The dataset is public and free with quick email signup. Access is via the Movement API.

This portal provides you with access to the dataset that was used to build a real-time human gesture recognition system. This was described in the seminal CVPR 2017 paper titled “A Low Power, Fully Event-Based Gesture Recognition System.” The access method is API and HTTP requests.

The CREST system is the publicly accessible repository of the CIA record dataset; manually reviewed and released records are accessioned directly into the National Archives in their original format. Over 11 million pages have been released in electronic format and reside on the CREST database. Access is via the advanced search tool and download of CSV.

The advanced physics particle accelerator curates a wealth of data, output from accelerator runs, and results. In this example, subatomic particles, muons, and electrons in PAT candidate format derived from /Electron/Run-2010B-Apr21ReReco-v1/AOD primary dataset are featured. CERN provides multiple access methods including API and SDK.

The example dataset here features MRI images for 539 individuals suffering from ASD (autism) and contains 573 typical controls. The same provider at this link contains an amazing collection of datasets for medical research! Access to various APIs and CSV files, plus images. Peruse the site to get a lot of interesting new data sources!

The National Library of Medicine and the U.S. Government curate these PubMed datasets for free public use. NCBI contains vast datasets on disease and medical research, such as these on cystic fibrosis, deafness, DiGeorge syndrome, autism, and hypertrophic cardiomyopathy. Access is through the downloadable API here.

Although the highest quality journal articles and data are paid, Scihub makes them all available free through this portal, via doc ID. This is a fabulous resource that provides access to ALL important academic papers previously limited to paid subscribers. The Wiki link shows a list of URLs for Scihub, and you can also get a browser extension to access all the articles. Doc ID numbers have a format like this: D41586-018-02098-8.

Academic Torrents contains a huge collection of datasets, such as this Aesthetic Visual Analysis (AVA), which contains over 250,000 images in 33 GB of data, along with a rich variety of metadata including a large number of aesthetic scores for each image, to be used in training models, and semantic labels for over 60 categories, as well as labels related to photographic style for high-level image quality categorization. Access this data through API and direct image download.

The Common Crawl dataset corpus contains petabytes of data collected over 8 years of web crawling, available for public use. The datasets contain raw web page data, metadata, and text. The DX Server API Reference contains the API with useful instructions and examples. The index is also available in JSON form.

Pew Research Center’s Internet Project offers scholars access to raw data sets from their original research. Datasets are available as single compressed archive files (.zip file) and so queries are performed locally. The example dataset is a survey of user views about qualifying online harassment.

A wide cross-section of topics for machine learning projects is housed and publicly available at Yahoo. Categories include Advertising and Market Data, Competition Data, Computing Systems Data, Graph and Social Data, Image Data, Language Data, and Ratings and Classification Data. The sample here contains marketing and advertiser key phrase findings. Access is by API.

The Catalogue of Life is the most comprehensive and authoritative global index of terrestrial species currently available online. It consists of a single integrated species checklist and taxonomic hierarchy. The Catalogue holds essential information on the names, relationships, and distributions of over 1.6 million species. Query by HTTP request and API tools available at the site.

Every imaginable dataset describing every aspect of New York City is available in this collection. The featured dataset here is NYC Wi-Fi Hotspot Locations, listing Wi-Fi providers such as CityBridge and LinkNYC 1 gigabyte (GB), including free Wi-Fi internet kiosks. Access includes MapReduce, JSON, and CSV download, and query by API.

KAPSARV is an enormous clearinghouse of data across every topic of life on earth. The Energy category alone contains 83 datasets on renewable sources in China. Access is by API and HTTP requests.

This major portal provides access to Historical Data of 120,000 Macroeconomic Indicators, Financial Data, Stocks and Market Data for 120 Countries, and more than 75,000 stocks, bonds, commodities, and currencies around Global Markets. This example features the very low unemployment rate in Norway, conveniently charted and presented for download. Access via API, web app query, and direct download.

This public dataset is featured in our machine learning tutorial above, and so we will give a complete description here. The dataset contains radar receiver data collected by a system in Goose Bay, Labrador, composed of 16 high-frequency antennas with a total transmitted power on the order of 6.4 kilowatts. See the paper for more details. The targets were free electrons in the ionosphere. Good radar signals are those showing evidence of some type of structure in the ionosphere. Bad signals pass through the ionosphere. Signals were processed using an autocorrelation function whose arguments are the time of a pulse and the pulse number. There were 17 pulse numbers for the Goose Bay system. Instances in this dataset are described by 2 attributes per pulse number, corresponding to the complex values returned by the function resulting from the complex electromagnetic signal. This is an important research dataset and is cited by more than 50 independent research papers. See the tutorial above for more.
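The arithmetic behind the 34 feature columns is simple: 17 pulse numbers times 2 attributes each gives 34 values per instance. The sketch below pairs such a row back into 17 complex values. The helper name `to_complex` and the sample numbers are invented for illustration, and the column layout (the two attributes of each pulse in adjacent columns, real part first) is an assumption that should be checked against the dataset documentation.

```python
PULSE_NUMBERS = 17
ATTRIBUTES_PER_PULSE = 2


def to_complex(features):
    """Pair a flat list of 34 features into 17 complex values.

    Assumes (hypothetically) the layout [re_0, im_0, re_1, im_1, ...].
    """
    expected = PULSE_NUMBERS * ATTRIBUTES_PER_PULSE
    if len(features) != expected:
        raise ValueError(f"expected {expected} features, got {len(features)}")
    return [complex(features[i], features[i + 1])
            for i in range(0, expected, 2)]


# Made-up instance with 34 values between -1 and 1; not real radar data.
sample = [round((-1) ** i * i / 34, 3) for i in range(34)]
pulses = to_complex(sample)

print(len(pulses))      # number of complex values per instance
print(abs(pulses[1]))   # magnitude of the second pulse's value
```

Working with magnitudes or phases of these pairs, rather than the raw columns, is one possible feature-engineering direction to try when revisiting the model.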

Here we have an unusual theme in dataset distribution based on a Wiki-style linked data structure: recommended best practice for exposing, sharing, and connecting pieces of data, information, and knowledge on the Semantic Web using URIs and RDF.

With links to 2,600 plus other data portals, this is a vast warehouse of dataset providers. Map and HTTP query provide access first to the categorical list, and then to a specified dataset. Data portals are listed by country here. Multiple access methods include SQL, CSV download, JSON, and XML formats.

Geo query Dataset categories include Business Data, Restaurant locations, Big Data for Machine Learning, Advertising, and Marketing. The map tool is used to query the geographical datasets.

DataHub initially lists 304 public datasets, including this one on monthly net new cash flow by US investors into various mutual fund investment classes (equities, bonds, etc). Statistics come from the Investment Company Institute (ICI), as published on the ICI Statistics pages, and the mode of access is a direct download of CSV files.

A collection of datasets arising from large social networks, temporal, citation, community, and many other networking datasets. Ego-Twitter is featured here as a dataset of 81k nodes in the network, Social Circles in Ego Networks. The datasets are available as a direct .txt download.

Wage-related datasets are popular at this large provider of USA economic data. An excellent GUI is provided here for easy access to all datasets. The top picks link demos how to quickly check the boxes of the datasets you want and download them in a variety of formats.

Dartmouth datasets document glaring variations in how medical resources are distributed and used in the United States, including data on hospitals and their affiliated physicians. The featured dataset highlights stats on Medicare payments. The datasets are available to download in CSV.

The main repositories are the Extraction Framework and DBpedia actually hosted on GitHub. The English version of the DBpedia knowledge base currently describes 6.6M entities of which 4.9M have abstracts. The datasets LEXVO are associated with DBpedia in NLP and other CNN methods of machine learning. Access is via many methods, HTTP requests, and API.

FAA makes available a wide range of data in many formats. Our featured dataset from the FAA accident reports includes the July 2017 incident report. And the data, in this case, is audio data for NLP machine learning and other methods of study. The featured data downloads in MP3 format.

Factual provides location datasets and is a company delivering public datasets to achieve innovation in product development in machine learning and data mining, mobile marketing, and real-world analytics. Its data asset is created from over 3 billion references to businesses, landmarks, and other points of interest across more than 100,000 unique sources.

Subsets of IMDb data are available for access to customers for personal and non-commercial use. Each dataset is contained in a gzipped, tab-separated-values (TSV) formatted file. Label data like primary title, tconst, and others are nicely supplied here. Direct download and local queries are methods of access.
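Gzipped TSV files like these can be read locally without unpacking them first. The following is a minimal sketch using only the Python standard library. The sample rows are made up, the `startYear` column and the `\N` marker for a missing value are included as assumptions about the file layout, and `read_tsv_gz` is a hypothetical helper name.

```python
import csv
import gzip
import io

# Made-up rows in the style of a title file; real files are far larger.
sample_tsv = (
    "tconst\tprimaryTitle\tstartYear\n"
    "tt0000001\tExample Film One\t1994\n"
    "tt0000002\tExample Film Two\t\\N\n"
)
compressed = gzip.compress(sample_tsv.encode("utf-8"))


def read_tsv_gz(source, limit=5):
    """Return up to `limit` rows of a gzipped TSV as dictionaries.

    `source` may be a file path or an open binary file object.
    """
    rows = []
    with gzip.open(source, mode="rt", encoding="utf-8", newline="") as handle:
        # QUOTE_NONE keeps stray double quotes in titles from confusing the parser.
        reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
        for row in reader:
            rows.append(row)
            if len(rows) >= limit:
                break
    return rows


rows = read_tsv_gz(io.BytesIO(compressed))
for row in rows:
    print(row["tconst"], row["primaryTitle"], row["startYear"])
```

Reading only the first few rows this way is a quick check of relevance and integrity before loading a full file into a data frame.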

ICPSR hosts thousands of datasets and provides a great query engine to get started. Myriad topics are covered. Once the interface and API are mastered, developers can write their own queries. This data collection contains county-level counts of arrests and offenses in the USA. Labels include fraud, embezzlement, vandalism, weapons violations, sex offenses.

The featured MOBIO dataset from DDP here is for NLP machine learning projects. The MOBIO database consists of bi-modal (audio and video) data taken from 152 people. Back up one level to see the full list of datasets provided here in several formats and query options.

A massive scientific research repo, BE contains datasets on sea ice and a thousand other topics related to climate change. Peruse the charted datasets easily and then download the CSV and gz files for analysis in your favorite ML framework.

This GIS dataset contains data on wastewater treatment plants, based on EPA’s Facility Registry Service (FRS), EPA’s Integrated Compliance Information System (ICIS), and other datasets. API is available for queries.

The surveys collect data on labor market activity, schooling, fertility, program participation, health, and much, much more. Dataset here labels over 8,000 participants in research surveys. Features its own tutorials on API access and query methods. Direct link to this set.