Cheat sheets are great. They pack tons of information without any fluff. So today we present to you a small cheat sheet covering most of the important formulas and topics in AI and ML. Let’s get to our first topic.


Activation functions act somewhat like a switch that controls whether a specific node (a neuron) in a neural network ‘fires’ or not. The most commonly used ones are the following:

The sigmoid (aka the logistic function) has a characteristic ‘S’ shape. One of the main reasons it’s widely used is that its output (range) always lies between 0 and 1. The function is differentiable and monotonic in nature. It is used when the output is expected to be a probability. The function is given by,

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

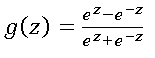
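A minimal sketch of the sigmoid in plain Python (standard library only; the name `sigmoid` is our own choice). Evaluating 1 / (1 + e^(-x)) directly makes `math.exp` raise `OverflowError` for large negative x, so this version switches to the algebraically equivalent e^x / (1 + e^x) whenever x < 0.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)), arranged so math.exp never overflows."""
    if x >= 0:
        # For x >= 0, e^(-x) <= 1, so this form is safe.
        return 1.0 / (1.0 + math.exp(-x))
    # For x < 0, use the equivalent form e^x / (1 + e^x); here e^x < 1.
    z = math.exp(x)
    return z / (1.0 + z)


for x in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    # Outputs stay within [0, 1] even for extreme inputs.
    print(f"sigmoid({x:g}) = {sigmoid(x):.6f}")
```

Note the symmetry σ(-x) = 1 - σ(x), which is what lets the two branches compute the same curve.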

The tanh function (aka the hyperbolic tangent function) is similar to the sigmoid in that it also has the characteristic ‘S’ shape. The main difference is that the range of tanh is bounded by -1 and 1, whereas the range of the sigmoid is bounded by 0 and 1. The function is differentiable and monotonic in nature. The function is given by,

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$
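The sketch below (plain Python; both function names are hypothetical) evaluates tanh straight from its exponential definition and checks it against the standard library’s `math.tanh`. It also illustrates the identity tanh(x) = 2σ(2x) - 1: tanh is just a shifted and rescaled sigmoid, which is why the two curves share the same ‘S’ shape.

```python
import math


def tanh_from_definition(x: float) -> float:
    """tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x)).

    Fine for moderate |x|; math.exp raises OverflowError once |x| exceeds about 709.
    """
    e_pos, e_neg = math.exp(x), math.exp(-x)
    return (e_pos - e_neg) / (e_pos + e_neg)


def tanh_via_sigmoid(x: float) -> float:
    """tanh written as a shifted, rescaled sigmoid: 2 * sigmoid(2x) - 1."""
    sigmoid_2x = 1.0 / (1.0 + math.exp(-2.0 * x))
    return 2.0 * sigmoid_2x - 1.0


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    # The three values should agree up to floating-point rounding.
    print(f"x={x:+.1f}  definition={tanh_from_definition(x):+.6f}  "
          f"via_sigmoid={tanh_via_sigmoid(x):+.6f}  math.tanh={math.tanh(x):+.6f}")
```

In practice you would just call `math.tanh`, which handles large |x| without overflow; the hand-written versions are only there to connect the code to the formula.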

The ReLU (Rectified Linear Unit) is arguably the most widely used activation function. It’s also known as the ramp function. Unlike sigmoid or tanh, ReLU is not a smooth function, i.e. it is not differentiable at 0. Its range is [0, infinity): it is bounded below by 0 but unbounded above. The function is monotonic in nature. The function is defined as,

$$\mathrm{ReLU}(x) = \max(0, x)$$
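A small sketch of ReLU and its derivative for scalar inputs. Because ReLU has no derivative at exactly 0, code has to pick some value there; returning 0 is a common convention and is simply an assumption of this example.

```python
def relu(x: float) -> float:
    """ReLU(x) = max(0, x): passes positive inputs through, zeroes out the rest."""
    return max(0.0, x)


def relu_derivative(x: float) -> float:
    """Slope of ReLU: 1 for x > 0 and 0 for x < 0.

    ReLU is not differentiable at x = 0; returning 0 there is a convention
    assumed for this sketch.
    """
    return 1.0 if x > 0 else 0.0


for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(f"x={x:+.1f}  relu={relu(x):.1f}  slope={relu_derivative(x):.1f}")
```

The zero slope for every negative input is exactly what the Leaky ReLU variant changes.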

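Leaky ReLU is a variant of the ReLU activation function, used primarily to address the ‘dying ReLU’ problem: a ReLU unit whose input is negative for every example always outputs 0 and receives a zero gradient, so it stops learning. Leaky ReLU instead gives negative inputs a small slope ϵ ≪ 1, which keeps the gradient non-zero and changes the range of the function from [0, infinity) to (-infinity, infinity). Like ReLU, Leaky ReLU is monotonic in nature. The function is given by,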

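$$\mathrm{LeakyReLU}(x) = \begin{cases} x & \text{if } x \ge 0 \\ \epsilon x & \text{if } x < 0 \end{cases}$$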
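A sketch of Leaky ReLU with ϵ exposed as the parameter `eps`. The default of 0.01 is an assumed, commonly used value, not something the formula prescribes. The derivative shows why it helps against dying ReLUs: for negative inputs the gradient is `eps` rather than 0.

```python
def leaky_relu(x: float, eps: float = 0.01) -> float:
    """Leaky ReLU: x for x >= 0, eps * x for x < 0 (eps = 0.01 is an assumed default)."""
    return x if x >= 0 else eps * x


def leaky_relu_derivative(x: float, eps: float = 0.01) -> float:
    """Slope: 1 for x > 0, eps for x < 0 (eps is also used at x = 0 by convention here)."""
    return 1.0 if x > 0 else eps


for x in (-100.0, -1.0, 0.0, 1.0, 100.0):
    # Negative inputs keep a small, non-zero gradient, so the unit can still learn.
    print(f"x={x:+.1f}  leaky_relu={leaky_relu(x):+.2f}  slope={leaky_relu_derivative(x):.2f}")
```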

Roughly speaking, a loss function measures how good (or rather, how bad) a prediction is. Given the true value and the predicted value, it outputs a number that reflects the error of the prediction: the lower the loss, the better the prediction.

Depending on the task at hand, loss functions can be broadly divided into two categories: regression loss functions and classification loss functions.

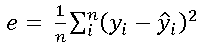

Mean squared error (also known as MSE, the quadratic loss, or the L2 loss) measures goodness in terms of the squared difference between the true value and the predicted value. Because the differences are squared, predictions that are far from the actual values are penalized much more severely than those that are somewhat closer. The loss is given by,

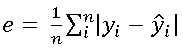
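For concreteness, here is MSE computed over plain Python lists, averaging the squared differences between true values y_i and predictions ŷ_i over the n samples. The helper name and the sample values are illustrative only.

```python
from typing import Sequence


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MSE = (1/n) * sum_i (y_i - y_hat_i)^2."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(mean_squared_error(y_true, y_pred))  # 0.375
```

The errors here are 0.5, -0.5, 0 and -1; squaring gives 0.25 + 0.25 + 0 + 1 = 1.5, and dividing by n = 4 gives 0.375. The single error of 1 contributes two thirds of the total.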

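Mean absolute error (or L1 loss) measures the goodness of the predicted value in terms of the absolute difference between the actual value and the predicted value. Because it does not square the differences as MSE does, it is much more robust to outliers. The formula is given by,

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

where $y_i$ is the true value, $\hat{y}_i$ is the predicted value, and $n$ is the number of samples.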
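To make the outlier claim concrete, this sketch compares MAE and MSE on two hypothetical prediction sets with the same total absolute error: one spreads the error evenly, the other concentrates it in a single prediction. Both helpers are our own minimal versions, not a library API.

```python
from typing import Sequence


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MAE = (1/n) * sum_i |y_i - y_hat_i|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MSE = (1/n) * sum_i (y_i - y_hat_i)^2, included for comparison."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [1.0, 2.0, 3.0, 4.0]
spread_out = [1.5, 2.5, 3.5, 4.5]    # every prediction off by 0.5
one_big_miss = [1.0, 2.0, 3.0, 6.0]  # a single prediction off by 2.0

for name, y_pred in (("spread_out", spread_out), ("one_big_miss", one_big_miss)):
    print(f"{name}: MAE={mean_absolute_error(y_true, y_pred):.3f}  "
          f"MSE={mean_squared_error(y_true, y_pred):.3f}")
```

MAE scores both sets at 0.5, while MSE goes from 0.25 to 1.0: the single large miss is penalized four times as heavily. This is why one outlier can dominate MSE, whereas its influence on MAE grows only linearly.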

Cross-entropy loss is the most common loss function for classification problems. It is also called the negative log-likelihood loss, because it equals the average negative log of the probability the model assigns to the true class. Its defining characteristic is that it heavily penalizes predictions that are both confident and wrong. The loss function is given by,

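$$\mathcal{L}_{CE} = -\frac{1}{n}\sum_{i=1}^{n}\sum_{c=1}^{C} y_{i,c}\,\log \hat{y}_{i,c}$$

where $C$ is the number of classes, $y_{i,c}$ is 1 if sample $i$ belongs to class $c$ and 0 otherwise, and $\hat{y}_{i,c}$ is the predicted probability of class $c$ for sample $i$.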
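A minimal sketch of cross-entropy over n samples, assuming one-hot targets given as class indices and predicted probabilities that already sum to 1 (for example, softmax outputs). With one-hot targets only the true-class term of the inner sum survives. The `eps` clipping against log(0) is an assumption added for numerical safety, not part of the definition.

```python
import math
from typing import Sequence


def cross_entropy(true_classes: Sequence[int],
                  probs: Sequence[Sequence[float]],
                  eps: float = 1e-12) -> float:
    """Mean cross-entropy over n samples with one-hot targets.

    true_classes[i] is the index of the correct class for sample i, and probs[i]
    is assumed to be a probability distribution over the C classes. Each sample
    contributes -log(probs[i][true_class]); eps clipping avoids log(0).
    """
    total = 0.0
    for c, p in zip(true_classes, probs):
        total -= math.log(max(p[c], eps))
    return total / len(true_classes)


confident_right = cross_entropy([0], [[0.90, 0.05, 0.05]])
confident_wrong = cross_entropy([0], [[0.05, 0.90, 0.05]])
print(f"confident and right: {confident_right:.3f}")
print(f"confident and wrong: {confident_wrong:.3f}")
```

Putting 0.9 on the true class costs -ln(0.9) ≈ 0.105, while putting 0.9 on a wrong class and only 0.05 on the true one costs -ln(0.05) ≈ 3.0: the confident mistake is penalized roughly 28 times more.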

Hinge loss lives mostly in SVM land; outside it, you won’t come across it a lot. In simple terms, the score of the correct class should exceed the score of every other class by at least some margin, and each class that violates this margin adds to the loss. It’s used for maximum-margin classification in support vector machines. The loss function is defined as follows:

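$$L_i = \sum_{j \neq y_i} \max\left(0,\; s_j - s_{y_i} + \Delta\right)$$

where $s_j$ is the score of class $j$ for sample $i$, $y_i$ is the correct class, and $\Delta$ is the margin.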
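A sketch of the multiclass hinge loss for a single sample, in the sum-over-classes form commonly used for multiclass SVMs (which variant the cheat sheet intends is our assumption). The margin defaults to 1, a common choice rather than a requirement, and the function name is hypothetical.

```python
from typing import Sequence


def multiclass_hinge_loss(scores: Sequence[float], correct: int, margin: float = 1.0) -> float:
    """L_i = sum over j != y_i of max(0, s_j - s_{y_i} + margin) for one sample.

    scores[j] is the raw (unnormalized) score of class j and correct is the index
    of the true class. margin = 1.0 is an assumed, commonly used default.
    """
    s_correct = scores[correct]
    return sum(
        max(0.0, s_j - s_correct + margin)
        for j, s_j in enumerate(scores)
        if j != correct
    )


# Class 1 outscores the correct class 0, so it violates the margin and adds to the loss;
# class 2 is far below class 0 and contributes nothing.
print(multiclass_hinge_loss([3.2, 5.1, -1.7], correct=0))
# The correct class wins by more than the margin everywhere, so the loss is 0.
print(multiclass_hinge_loss([10.0, 2.0, 1.0], correct=0))  # 0.0
```

In the first call only class 1 contributes, 5.1 - 3.2 + 1 = 2.9 (up to floating-point rounding). Once every wrong class is at least a margin below the correct one, the loss is exactly 0, no matter how confident the scores are.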

The whole statistical learning domain could be divided into 3 categories:

Machine learning tasks can be divided into three categories: regression, classification, and feature reduction.

Given the training data, a regression task asks you to predict a continuous target value from the input features. The point to note here is that the predicted value can be any real number rather than one of a fixed set of values.

Given the training data, a classification task asks you to assign each input to one of a set of predefined classes.

Oftentimes your data will have far more features than you would like. More features also mean more complex models, which tend to be slower and more prone to overfitting, hurting accuracy. So reducing the number of features in a meaningful way is almost always a good idea.