Rust and Go are both system-level programming languages. They have a lot in common, but their similarities are not critical to the choice! The crucial point in the choice lies in the differences. We want to explore the salient functional nuances of both languages. We want to know exactly why you should choose Rust for your project instead of Go, or vice versa.

What benefits do the architects of Rust and Go intend for us to reap? The answer cannot be discovered simply by inspecting syntax. Ultimately, the real answers to this question touch on the most sophisticated techniques of programming and compiler design!

The important differences between Rust and Go are subtle, not superficial. They lie in the programming paradigms each language supports: how each one handles concurrency, memory safety, type strength, and generics, among other things. On this level, everything important depends on how each compiler handles paradigms such as concurrency and parallelism. Concurrency in Rust vs Go is a hot topic indeed!

Concurrency is central to Go programming methodology. Go's architects made it easy for developers to write programs that take advantage of networked and multi-core machines. But the Go compiler is less cautious about thread safety than Rust's. Concurrency in Go is based on the communicating sequential processes (CSP) model, and this is an important theme in the Go paradigm. Here is the quintessence of the Go programming language, concurrency included:

Rust architects endowed their compiler with a novel capability to enforce memory safety called Ownership. The Rust compiler actually tracks the end of scope for pointers and destroys the allocation when the end of the scope is reached. Ownership solves a lot of memory and thread safety problems familiar to C and Go programmers. Here is the list of awesome features built into the Rust compiler:

Before we get into the mechanics of these two paradigm differences, let’s reach a useful conclusion a bit early on. The Go vs Rust choice depends on which of the unique functional features you need most. The highest priorities in the design of your coding project will decide for you, based on the features above! Let’s look at some specific examples to illustrate these nuances.

Before we get into the nuts and bolts, let’s take a moment to clear up some confusion about nomenclature! First, we’re talking about Go programming language. “Golang programming language” refers to the same thing, but it’s not the best way to search. Likewise, “Rust programming language” is the correct usage in searches, and will spare you sifting through hits about a video game!

To begin our deep comparison, we’re going to look at the top distinction on Go’s architect list. We want to explore how Go handles one of the crucial programming methods today: concurrency. (The Rust concurrency model is truly innovative in its own right, and we come to it afterwards.) We often use similar terms interchangeably, even though they have distinctly different meanings. Concurrency, parallelism, coroutines, threading, asynchronous programming (async/await), and other terms all involve splitting one process into two or more in order to gain efficiency in the outcome. The principle of each is similar, but each implementation has its own application. Let’s start with the coroutine, which is actually called a goroutine in the Golang documentation. Here are some salient aspects of Go:

Go achieves parallelism by communicating state through channels rather than through yield operators. A simple example of a coroutine is a generator with a yield operator which serves these functions:

C# provides async/await functionality to abstract a state machine. The process runs until it blocks, whereupon control passes to the thread pool scheduler. Simple coroutines like this work well in streaming parsers, which consume input until a desired value or calculation is ready. Have a look at this Lua example for comparison:

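    function myIntegers ()
      local myCount = 0
      return function ()
        while true do
          coroutine.yield(myCount)
          myCount = myCount + 1
        end
      end
    end

    ...

    -- coroutine.wrap returns a function that resumes the coroutine on each call
    resume = coroutine.wrap(myIntegers())

    function generateInteger ()
      return resume()
    end

    ...

    generateInteger() => 0
    generateInteger() => 1
    generateInteger() => 2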

Now compare the above yield operator with parallelism in a goroutine. In the following example, Go replaces the yield with a channel:

    func myIntegers() chan int {
        yield := make(chan int) // the channel takes the place of the yield operator
        myCount := 0
        go func() {
            for {
                yield <- myCount // blocks until a receiver takes the value
                myCount++
            }
        }()
        return yield
    }

    ...

    var resume = myIntegers()

    func generateInteger() int {
        return <-resume
    }

    generateInteger() => 0
    generateInteger() => 1
    generateInteger() => 2
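For comparison, the same generator can be sketched in Rust with the standard library's `std::sync::mpsc` channels. This is a minimal sketch, not a canonical translation: the name `my_integers` is hypothetical, and a zero-capacity `sync_channel` is assumed here as the closest match to an unbuffered Go channel.

```rust
use std::sync::mpsc::{sync_channel, Receiver};
use std::thread;

// Hypothetical helper mirroring the Go `myIntegers` generator above.
fn my_integers() -> Receiver<i32> {
    // Capacity 0 makes this a rendezvous channel: each `send` blocks
    // until the receiver is ready to take the value.
    let (tx, rx) = sync_channel(0);
    thread::spawn(move || {
        let mut my_count = 0;
        // `send` returns Err once the receiver has been dropped,
        // which ends the loop and lets the thread exit.
        while tx.send(my_count).is_ok() {
            my_count += 1;
        }
    });
    rx
}

fn main() {
    let resume = my_integers();
    for _ in 0..3 {
        println!("{}", resume.recv().unwrap());
    }
}
```

Unlike the goroutine, the Rust producer thread stops by itself when the receiving end goes away, because the failed `send` is an ordinary value the loop can check.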

Continuing on the theme of Rust thread concurrency, let’s look at a cutting-edge feature of the Rust programming language: how Rust achieves a high level of thread safety. In fact, thread safety was a foundational goal of the Rust language development project! Rust architects describe their “secret weapon” as ownership. In practice, most memory safety and concurrency bugs arise when code accesses memory which should be off-limits! Ownership in Rust is a sort of best practice for memory access control which Rust’s compiler can actually enforce statically for coders! How does it work?

The best possible statement of what Ownership means in Rust comes straight from the architects’ mouth:

“In Rust, every value has an “owning scope,” and passing or returning a value means transferring ownership (“moving” it) to a new scope. Values that are still owned when a scope ends are automatically destroyed at that point.”

The result is an end to dangling pointers and a new level of memory safety in apps. The following code sample shows how Rust automatically de-allocates the memory when control passes out of the owner’s scope:

    fn make_vector() -> Vec<i32> {
        let mut vector = Vec::new();
        vector.push(0);
        vector.push(1);
        vector // transfer ownership to the caller
    }

    fn print_vector(vector: Vec<i32>) {
        // the `vector` parameter is owned by this scope, and thus by `print_vector`
        for i in vector.iter() {
            println!("{}", i)
        }
        // `vector` is deallocated here, at the end of the scope
    }

    fn use_vector() {
        let vector = make_vector(); // take ownership of the vector
        print_vector(vector); // pass ownership to `print_vector`
    }

Note that print_vector does not transfer ownership and so the vector is destroyed at the end of its scope! If memory safety is critical in your development then Rust should be on your radar!
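When the caller still needs the vector afterwards, the usual alternative is to lend it rather than give it away. The sketch below contrasts the two; the function names `sum_borrowed` and `sum_owned` are hypothetical and chosen only for illustration.

```rust
// Borrows the data: the caller keeps ownership and can use it again.
fn sum_borrowed(values: &[i32]) -> i32 {
    values.iter().sum()
}

// Takes ownership: `values` is deallocated when this function returns.
fn sum_owned(values: Vec<i32>) -> i32 {
    values.iter().sum()
}

fn main() {
    let vector = vec![0, 1, 2];
    let a = sum_borrowed(&vector); // lend the vector; we still own it
    let b = sum_borrowed(&vector); // so it can be lent a second time
    let c = sum_owned(vector); // ownership moves into `sum_owned`
    // sum_borrowed(&vector); // would not compile: `vector` was moved
    println!("{} {} {}", a, b, c);
}
```

Uncommenting the last call makes the compiler reject the program, which is the static enforcement described above.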

Rust supports concurrency by message passing, where threads (sometimes called actors) communicate by sending each other messages. In other words, Rust can follow the maxim familiar from the Go documentation: share memory by communicating rather than communicate by sharing memory. Rust’s implementation of Ownership turns this maxim into a rule which the compiler can check and enforce! However, the channel and transmit API looks a tad cryptic, as shown here in simplified form (these are illustrative signatures, not the exact standard library API):

fn send<T: Send>(chan: &Channel<T>, t: T);

fn recv<T: Send>(chan: &Channel<T>) -> T;

Referring to our vector example above, the following code creates a vector, sends it over a channel, and then tries to keep using it:

    let mut vector = Vec::new();
    // code here ...
    send(&chan, vector);
    print_vector(&vector);

Here, the thread creates an instance of the vector, transmits it to another thread, and then continues using it. The potential hazard lies in the thread receiving the vector, which could mutate it while the caller continues to run. The call to print_vector could then produce an error or even a race condition. In Rust this cannot happen: send takes ownership of the vector, so the compiler rejects the later use of it as a use of a moved value.
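The same idea can be tried with the real channel type in the standard library, `std::sync::mpsc`. This is a minimal sketch under that assumption; the helper name `sum_on_worker` is hypothetical.

```rust
use std::sync::mpsc;
use std::thread;

// Hypothetical helper: hands a vector to a worker thread and returns
// the sum that the worker computes.
fn sum_on_worker(vector: Vec<i32>) -> i32 {
    let (tx, rx) = mpsc::channel();
    let worker = thread::spawn(move || {
        // The worker owns the received vector outright.
        let received: Vec<i32> = rx.recv().unwrap();
        received.iter().sum::<i32>()
    });
    tx.send(vector).unwrap(); // ownership of `vector` moves into the channel
    // println!("{:?}", vector); // would not compile: use of moved value
    worker.join().unwrap()
}

fn main() {
    println!("{}", sum_on_worker(vec![0, 1]));
}
```

Because the sender gives up the vector at the `send` call, there is no window in which both threads can touch it.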

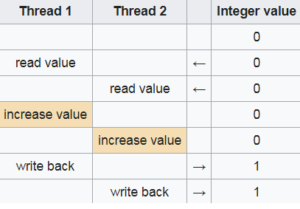

For those who may be new to the concept, threads can interfere with each other if not locked or synchronized correctly. Imagine two threads attempting to increment a global variable, each by adding a value of one. If both run without locking, both may read zero and write back 1, so the result is 1 instead of 2, as the diagram illustrates. In Rust, locking and synchronization are tied into Ownership, which leads to increased thread safety.
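The counter scenario can be sketched with `Arc` and `Mutex` from the standard library. This is one common way to do it rather than the only one, and the helper name `parallel_increment` is hypothetical.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Hypothetical helper: `threads` threads each add 1 to a shared counter
// `per_thread` times; the final count is returned.
fn parallel_increment(threads: usize, per_thread: u32) -> u32 {
    let counter = Arc::new(Mutex::new(0u32));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_thread {
                    // The temporary lock guard is dropped at the end of
                    // this statement, which releases the lock.
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    let total = *counter.lock().unwrap();
    total
}

fn main() {
    println!("{}", parallel_increment(2, 1000));
}
```

The counter can only be reached through `lock()`, so forgetting to lock is not something the program can express; sharing a plain `u32` between the threads would be rejected at compile time.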

Rust provides syntax to develop an algorithm as a generalization, or generic, and then specify the types upon instantiation. In Rust, this is called generics as usual. Go was long more circumspect on generics: before type parameters arrived in Go 1.18, Go developer communities suggested alternatives such as closures. Interfaces, for example, also serve the purpose of developing a model in generic form. Reflection is yet another paradigm along similar lines. Reflection works with objects even when their types are not available at compile time.

Rust generics look like this in simplest form:

    fn generic_Fname<T>(x: T) {
        // Code using `x` ...
    }

This declaration does two things: <T> makes the function generic over one type T, and the parameter ‘x’ is of type T. We can also store a generic type using a struct:

    struct myPoint<T> {
        x1: T,
        y1: T,
    }

Which can later be used with multiple types such as:

let integer_origin = myPoint { x1: 0, y1: 0 };

let float_origin = myPoint { x1: 0.0, y1: 0.0 };
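Generic code usually needs to say what it expects of T. The sketch below assumes a struct of the same shape as the one above, renamed `Point` to follow Rust's usual PascalCase, with a hypothetical `plus` method that is only available for types supporting `+`.

```rust
use std::ops::Add;

// Same shape as the struct above; the name `Point` is an assumption.
#[derive(Debug, PartialEq)]
struct Point<T> {
    x1: T,
    y1: T,
}

// The bound `T: Add<Output = T>` restricts this impl to types that support `+`.
impl<T: Add<Output = T>> Point<T> {
    // Adds two points component-wise.
    fn plus(self, other: Point<T>) -> Point<T> {
        Point {
            x1: self.x1 + other.x1,
            y1: self.y1 + other.y1,
        }
    }
}

fn main() {
    let a = Point { x1: 1, y1: 2 }.plus(Point { x1: 3, y1: 4 });
    let b = Point { x1: 0.5, y1: 1.5 }.plus(Point { x1: 0.5, y1: 0.5 });
    println!("{:?} {:?}", a, b);
}
```

The same `plus` works for integer and floating-point points, while a `Point<&str>` would still compile as a value but would not have the method.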

Probing through our list of exciting Rust and Go functionality, we now come to the important subject of integration with other languages. Go integrates smoothly with C through cgo, and many packages are available to support co-development. Here is an example Go segment making use of the C standard library:

    package rand

    // The cgo preamble comment must sit directly above import "C".
    /*
    #include <stdlib.h>
    */
    import "C"

    func myRandom() int {
        return int(C.random())
    }

    func mySeed(i int) {
        C.srandom(C.uint(i))
    }

Rust uses type classes called “traits.” Rust also supports type inference, and variables declared with the let syntax embody this paradigm. Several of these features build on ideas from the Haskell programming language. The purpose of type classes is standard ad-hoc polymorphism, which is accomplished by declaring type variables with constraints. This paradigm flows nicely with generics. Have a look at this code example using traits in Rust:

    trait myPrintDebug {
        fn my_print_debug(&self);
    }

The trait declares one method that takes &self. Now we can implement the trait for our own types so that a value can print itself once it is instantiated, beginning with a struct:

    #[derive(Debug)] // needed so that `{:?}` can format the value
    struct myTest;

    impl myPrintDebug for myTest {
        fn my_print_debug(&self) {
            println!("{:?}", self)
        }
    }

    // to print debug values...
    fn do_a_segment<T: myPrintDebug>(value: &T) {
        value.my_print_debug();
    }

    fn main() {
        let test = myTest;
        do_a_segment(&test);
    }

A distinction here is that variables declared with let do not necessarily need an initial value (which would otherwise determine the type); the compiler can infer the type from a later assignment. If a variable is assigned more than once, it must be marked as mut.
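Both rules can be seen in a few lines. This is a minimal sketch; the function `describe` and its wording are hypothetical.

```rust
// Hypothetical helper illustrating deferred initialization and `mut`.
fn describe(values: &[i32]) -> String {
    let mut evens = 0; // assigned more than once, so it must be `mut`
    for v in values {
        if v % 2 == 0 {
            evens = evens + 1;
        }
    }

    let verdict; // no initial value; the type is inferred from the assignments below
    if evens == values.len() {
        verdict = "all even";
    } else {
        verdict = "not all even";
    }

    format!("{} of {}: {}", evens, values.len(), verdict)
}

fn main() {
    println!("{}", describe(&[2, 4]));
}
```

Dropping `mut` from `evens` makes the compiler reject the second assignment, while `verdict` needs no `mut` because each path assigns it exactly once.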

In addition to being syntactically similar to C++, Rust is also similar to C++ in performance benchmarks. Rust system requirements and Go system requirements depend on the application under development and its intended scalability. The innovative type system implemented in Rust has the particular goal of supporting coding paradigms like those of C++, but with increased enforcement of memory safety. The result remains competitive with most C++ code in terms of performance, while at the same time guaranteeing memory safety.

We’ve compared the mechanics in our Rust vs Go survey. Now, let’s have a look at what developers are doing with these tools today. Mozilla was the original underwriter of Rust, and so it’s not surprising that the Servo browser engine was built with Rust.

Servo is an advanced parallel browser engine, sponsored by Mozilla, which is currently under community development with Rust programming language. Among the goals of the Servo project which specifically leverage the concurrency and ownership advantages of Rust are:

What does the Go language have to compare with this? There is no shortage of success stories here! In fact, as Google’s premier language, Go is currently in use in thousands of system-level applications globally. For example, one of Golang’s greatest strengths is building web applications and tools. It owes this to its concurrency primitives and garbage collection, which make memory management easier than in C.

One of Go’s biggest success stories is that it was used to build the wildly popular Docker platform! Docker is popular for running applications in containers for testing, continuous deployment, and distributed systems. Its use of operating-system-level virtualization highlights Go’s system programming strengths. That’s it for Go news today, so let’s wrap up with Rust vs Go insight…

The best plan to explore Rust system programming is with a clear goal to expand your knowledge of coding paradigms which may be new to you! In other words, we developers sometimes get into comfortable habits. But the world of programming expands dramatically. We must be tenacious and intrepid learners to stay on the competitive edge. Rust and Go are both open source. There is vast community developer interest in both. So, you will find lots of new ideas to explore. In the meantime, check in with ByteScout for the next developer tutorial. Stay with us and stay in the loop!