This article explains how to detect fake currency notes with machine learning using the Python Scikit-learn library. Based on several features of a currency note, the machine learning model developed in this article predicts whether or not the note is fake.

Execute the following commands on your command terminal to download the Python libraries that you will be using in this article:

pip install pandas
pip install numpy
pip install -U scikit-learn
pip install matplotlib

Execute the following script in a Python editor of your choice to import the classes and modules from the libraries that you just downloaded.

import pandas as pd
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
import matplotlib.pyplot as plt
# Jupyter/IPython only; remove this line when running a plain Python script
%matplotlib inline

Machine learning models learn from a dataset that contains features and output labels; the model learns the relationship between the two. The dataset that you are going to use to train the machine learning model is freely available here.

The dataset contains information about images of banknotes and corresponding labels that denote whether the banknotes are fake or not. The features available for a banknote image are variance, skewness, kurtosis, and entropy. The label has two possible values: 0 or 1, where 0 stands for an original note and 1 denotes a fake note.

The read_csv() method of the Pandas library can be used to import the CSV file that contains the dataset as shown in the following script. The following script also prints the first five rows of the dataset using the head() method.

#importing dataset

dataset = pd.read_csv("https://raw.githubusercontent.com/leducsang97/Detecting-counterfeit-currency/master/banknote.csv")

dataset.head()

Output:

|   | variance | skewness | kurtosis | entropy | class |
|---|----------|----------|----------|---------|-------|
| 0 | 3.62160 | 8.6661 | -2.8073 | -0.44699 | 0 |
| 1 | 4.54590 | 8.1674 | -2.4586 | -1.46210 | 0 |
| 2 | 3.86600 | -2.6383 | 1.9242 | 0.10645 | 0 |
| 3 | 3.45660 | 9.5228 | -4.0112 | -3.59440 | 0 |
| 4 | 0.32924 | -4.4552 | 4.5718 | -0.98880 | 0 |

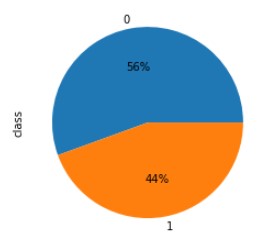
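Before going further, it can help to run a few sanity checks on the loaded data. The sketch below is only an illustration: it reads a handful of rows in the same layout as the output above from an in-memory string instead of the remote file, and the names `csv_text` and `sample` are made up for this example. The same checks should apply to the full `dataset` variable.

```python
import io
import pandas as pd

# A few rows typed in by hand as CSV text (header names are an assumption).
csv_text = """variance,skewness,kurtosis,entropy,class
3.62160,8.6661,-2.8073,-0.44699,0
4.54590,8.1674,-2.4586,-1.46210,0
3.86600,-2.6383,1.9242,0.10645,0
3.45660,9.5228,-4.0112,-3.59440,0
0.32924,-4.4552,4.5718,-0.98880,0
"""

# read_csv accepts any file-like object, so StringIO stands in for the file.
sample = pd.read_csv(io.StringIO(csv_text))

print(sample.shape)                  # (rows, columns)
print(sample.dtypes)                 # data type of every column
print(sample.isnull().sum().sum())   # total number of missing values
```

If the number of missing values is not zero, the rows with gaps would need to be dropped or filled before training.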

Let’s plot a pie chart that shows the ratio of fake and original currency notes in the dataset. Execute the following script.

dataset['class'].value_counts().plot(kind='pie', autopct='%1.0f%%')

Output:

The above pie chart shows that 56% of the currency notes are genuine, while the remaining 44% are fake.
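If you prefer numbers to a chart, the same class ratio can be computed directly. The following is a minimal sketch on a hypothetical nine-element label column (`labels` is not part of the original article); on the real data you would call the same methods on `dataset['class']`.

```python
import pandas as pd

# Hypothetical stand-in for dataset['class']: 5 genuine (0) and 4 fake (1) notes.
labels = pd.Series([0, 0, 0, 0, 0, 1, 1, 1, 1], name="class")

# Absolute number of notes per class.
counts = labels.value_counts()

# Share of each class as a percentage, rounded to one decimal place.
percentages = (labels.value_counts(normalize=True) * 100).round(1)

print(counts)
print(percentages)
```

A roughly balanced ratio such as this one is what makes plain accuracy a reasonable metric later on.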

Since machine learning models find a relationship between features and labels, we need to split our dataset into a feature set and a label set. The class attribute represents the label in the dataset. Therefore, to create the feature set we remove the class attribute, and to create the label set we keep only the class attribute and discard all the remaining attributes. In the following script, the variable X contains the features and the variable y contains the labels.

#divide data into features and label set
X = dataset.drop(["class"], axis = 1)
y = dataset.filter(["class"], axis = 1)
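To see what the two calls return, here is a small sketch on a hypothetical three-row frame (`toy` and its values are illustrative only). It also shows a common alternative, selecting the label column directly, which yields a one-dimensional Series rather than a single-column DataFrame.

```python
import pandas as pd

# Hypothetical three-row frame; the values are illustrative only.
toy = pd.DataFrame({
    "variance": [3.6, 4.5, -1.2],
    "skewness": [8.7, 8.2, -3.1],
    "class": [0, 0, 1],
})

X_toy = toy.drop(["class"], axis=1)       # DataFrame with the feature columns
y_frame = toy.filter(["class"], axis=1)   # DataFrame with a single column
y_series = toy["class"]                   # one-dimensional Series

print(X_toy.shape)     # (3, 2)
print(y_frame.shape)   # (3, 1)
print(y_series.shape)  # (3,)
```

Scikit-learn estimators generally expect a one-dimensional label array, so the Series form (or a flattened array) avoids a shape warning during training.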

The following script divides the dataset into a 75% training set and a 25% test set. Machine learning models are trained on the training set while the performance of the machine learning model is evaluated on the test set.

from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=42)
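One optional refinement, not used in this article, is a stratified split, which keeps the class ratio the same in the training and test sets. The sketch below uses synthetic data (the names `X_demo` and `y_demo` are made up for the example) and the `stratify` parameter of `train_test_split`.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic data: 100 samples, 4 features, 60 genuine (0) and 40 fake (1).
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(100, 4))
y_demo = np.array([0] * 60 + [1] * 40)

# stratify=y_demo asks for the same class proportions in both subsets.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.25, random_state=42, stratify=y_demo
)

print(X_tr.shape, X_te.shape)    # sizes of the training and test sets
print(y_tr.mean(), y_te.mean())  # share of fake notes in each subset
```

Fixing `random_state` makes the split reproducible, so repeated runs evaluate the model on the same test records.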

Before a machine learning model is trained, it is good practice to scale the features so that all the attributes are on a comparable scale. The StandardScaler class from the sklearn.preprocessing module standardizes each feature to zero mean and unit variance, as shown in the following script. Note that the scaler is fitted on the training set only; the test set is transformed with the statistics learned from the training set.

#applying scaling on training and test data
from sklearn.preprocessing import StandardScaler
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)
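To make the scaling step concrete: StandardScaler replaces each value x with (x - mean) / standard deviation, where the mean and standard deviation are computed per feature on the data passed to `fit`. The following sketch uses tiny made-up arrays (`train` and `test` are hypothetical names) to show that the test data is scaled with the training statistics.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Tiny made-up data: two features on very different scales.
train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
test = np.array([[4.0, 40.0]])

sc = StandardScaler()
train_scaled = sc.fit_transform(train)  # learns mean/std from train, then scales it
test_scaled = sc.transform(test)        # reuses the training mean/std

print(sc.mean_)                   # per-feature means learned from the training data
print(train_scaled.mean(axis=0))  # approximately 0 for every feature
print(train_scaled.std(axis=0))   # approximately 1 for every feature
print(test_scaled)                # test values expressed in training-set units
```

Calling `fit_transform` on the test set instead would leak information about the test data into the preprocessing step.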

Once the data has been divided into training and test sets and scaled, you are ready to train your machine learning model. The Python Scikit-learn library offers several algorithms that you can use for classification tasks. A complete list is available at this link. You can use any classification model from this link. In this article, you will use the Logistic Regression algorithm. To train a model, you have to pass the training features and labels to the fit() method of the LogisticRegression class object as shown in the following script. To make predictions on the test set, you can use the predict() method.

#importing logistic regression model
from sklearn.linear_model import LogisticRegression
#training the logistic regression model
classifier = LogisticRegression()
#flatten the single-column label frame into the 1-D array that fit() expects
classifier.fit(X_train, y_train.values.ravel())
#making predictions on test set
y_pred = classifier.predict(X_test)
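As an alternative way to organize the same two steps, scaling and classification can be chained in a Scikit-learn pipeline, so the scaler is always applied before the classifier. This is a sketch on synthetic, well-separated data, not the article's dataset; `X_demo`, `y_demo`, `model`, and `sample` are names chosen for the example.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic two-class data: class 0 centred at -2, class 1 centred at +2.
rng = np.random.default_rng(0)
X_demo = np.vstack([
    rng.normal(loc=-2.0, size=(50, 4)),
    rng.normal(loc=2.0, size=(50, 4)),
])
y_demo = np.array([0] * 50 + [1] * 50)

# The pipeline fits the scaler and then the classifier in one call.
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_demo, y_demo)

sample = np.array([[2.0, 2.0, 2.0, 2.0]])
print(model.predict(sample))        # predicted class label
print(model.predict_proba(sample))  # probability of class 0 and of class 1
```

The `predict_proba()` method is useful when you want a confidence value rather than only a hard 0 or 1 label.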

To see how well your machine learning model performs on the test set, the final step is to evaluate it. To do so, you can use accuracy as a metric. Accuracy is the number of correct predictions made by your model divided by the total number of records in the test set. To find accuracy, you can pass the actual labels and the predicted labels to the accuracy_score() function of the sklearn.metrics module. This is shown in the following script:

print(accuracy_score(y_test, y_pred))

Output:

0.9795918367346939

The output above shows that the accuracy of our model is 97.96%, which means that it correctly predicted whether or not a banknote is fake for 97.96% of the records in the test set. Impressive, no?
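To make the definition of accuracy concrete, the sketch below computes it by hand on eight made-up labels and compares the result with `accuracy_score()`. It also prints a confusion matrix, which is not used elsewhere in this article but shows which kind of mistake the model makes. The arrays `y_true` and `y_hat` are hypothetical.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Made-up actual and predicted labels (0 = genuine, 1 = fake).
y_true = np.array([0, 0, 0, 1, 1, 1, 1, 0])
y_hat = np.array([0, 0, 1, 1, 1, 0, 1, 0])

# Accuracy by hand: correct predictions divided by total predictions.
manual = (y_true == y_hat).mean()
print(manual)                          # 0.75
print(accuracy_score(y_true, y_hat))   # 0.75

# Rows are actual classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_hat)
print(cm)
```

In this toy case one genuine note is flagged as fake and one fake note is accepted as genuine; for a counterfeit detector the second kind of error is usually the more costly one.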

Let’s now see how you can make predictions on a single banknote instead of a complete test set. To make a prediction on an individual banknote, you need values for variance, skewness, kurtosis, and entropy of the image of the banknote. Let’s print the feature values for the banknote at index 50 in the dataset (the 51st row, since the index starts at 0).

dataset.loc[50]

Output:

variance    4.3239
skewness   -4.8835
curtosis    3.4356
entropy    -0.5776
class       0.0000
Name: 50, dtype: float64

From the above output, we know that this banknote is not fake since the value of the class attribute is 0.

To find the label for the note at index 50 using the trained machine learning model, you first need to pass the features of that note to the fitted standard scaler, which scales them in the same way as the training data. Next, you need to pass the scaled record to the predict() method of the model that you already trained. Look at the following script for reference.

single_record = scaler.transform(X.values[50].reshape(1, -1))
#making predictions on the single record
fake_note = classifier.predict(single_record)
print(fake_note)

Output:

[0]

The above output shows that our banknote is not fake, which is the correct output.
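If you need to classify many individual notes, the scale-then-predict steps can be wrapped in a small helper. The following is a sketch under stated assumptions: the six training rows are made up, and `FEATURES`, `predict_note`, `sc`, and `clf` are hypothetical names that do not appear in the scripts above. Passing a one-row DataFrame with the original column names keeps the scaler input consistent with the data it was fitted on.

```python
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

FEATURES = ["variance", "skewness", "kurtosis", "entropy"]

# Made-up training rows: three genuine (0) and three fake (1) notes.
train = pd.DataFrame(
    [[3.0, 8.0, -2.0, -0.5],
     [4.0, 7.5, -2.5, -1.0],
     [3.5, 9.0, -3.0, -0.8],
     [-3.0, -6.0, 6.0, 0.5],
     [-4.0, -7.0, 7.0, 0.2],
     [-3.5, -5.0, 5.5, 0.4]],
    columns=FEATURES,
)
labels = [0, 0, 0, 1, 1, 1]

sc = StandardScaler().fit(train)
clf = LogisticRegression().fit(sc.transform(train), labels)

def predict_note(values):
    """Return (label, probability of being fake) for one note's feature values."""
    row = pd.DataFrame([values], columns=FEATURES)  # one-row frame, same columns
    scaled = sc.transform(row)                      # scale with training statistics
    label = int(clf.predict(scaled)[0])
    prob_fake = float(clf.predict_proba(scaled)[0, 1])
    return label, prob_fake

print(predict_note([3.2, 8.5, -2.2, -0.6]))
```

The helper returns the probability alongside the label, so borderline notes can be flagged for manual inspection instead of being accepted or rejected outright.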